
Lexi Collazo

Feb 17, 2026

No alarms go off.

No alerts are triggered.

No policies are technically broken.

An employee pastes a customer email into a generative AI tool to draft a response faster. Another uploads an internal document to summarize key points before a meeting. A third asks an AI assistant to review a piece of code or rewrite a proposal. None of this feels risky. In fact, it feels efficient.

But in each case, sensitive business data leaves the organization without oversight, logging, or clear retention rules.

This is what Shadow AI looks like in practice. It’s not malicious. It’s not even intentional. It’s the unsanctioned use of generative AI tools in everyday workflows, often by well-meaning employees trying to move faster. And it’s quietly creating a new category of data exposure that many SMBs are not prepared to manage.

Unlike traditional Shadow IT, Shadow AI doesn’t always involve installing new software or bypassing IT controls. Most activity happens directly in the browser, using widely available tools that employees already trust. That makes it harder to see, harder to govern, and easier to overlook until sensitive data has already been shared.

For SMBs, this introduces a risk that sits somewhere between productivity and security. For MSPs, it adds another layer of responsibility. Clients expect guidance on how to use AI safely, even when they have no clear policies or controls in place.

Understanding what Shadow AI is, and why it behaves differently from past technology risks, is the first step toward addressing it without blocking innovation.

What Is Shadow AI (and Why It’s Different From Shadow IT)

Shadow AI refers to the use of generative AI tools inside an organization without formal approval, oversight, or governance. In most cases, it happens quietly. Employees use public AI services to draft emails, summarize documents, analyze data, or speed up routine work, often without realizing they are introducing risk.

At first glance, this may look similar to Shadow IT. But the risk profile is very different.

Traditional Shadow IT usually involves unsanctioned applications or services. The main concerns there are visibility, security posture, and integration. Shadow AI, by contrast, is less about the tool itself and more about the data being shared. When users paste content into an AI prompt, upload files, or provide contextual background, that information leaves the organization’s control in ways that are often unclear to both the user and IT.

This is what makes Shadow AI particularly difficult to manage. There is no software installation. No suspicious network traffic. No obvious policy violation from the user’s point of view. Everything happens in a browser, using tools that feel familiar and widely accepted.

Another key difference is data persistence. With many AI services, it’s not always clear how long data is retained, how it’s processed, or whether it’s used to improve models. Even when providers offer enterprise controls, those protections rarely apply to employees using free or personal accounts. For SMBs, this creates a gap between data protection policies and real-world behavior.

Most importantly, Shadow AI is rarely malicious. Employees aren’t trying to bypass controls. They’re trying to be productive. That makes education, visibility, and governance far more effective than simple blocking. Treating Shadow AI as a user behavior problem rather than a technical violation is essential for managing it successfully.

Understanding this distinction is critical. Shadow AI isn’t just another form of Shadow IT. It represents a new way sensitive data can move outside the organization, often without triggering any of the safeguards SMBs and MSPs rely on today.

How Shadow AI Creates Hidden Data Exposure

The real risk of Shadow AI isn’t the tools themselves. It’s the type of information that quietly flows into them during everyday work.

Customer data is often the first to be exposed. Support conversations, sales emails, and account details are pasted into AI tools to draft responses or summarize issues. Even when the intent is harmless, this can include names, contact information, transaction history, or sensitive context that organizations are obligated to protect.

Internal business information follows closely behind. Employees upload proposals, contracts, financial summaries, and internal documentation to improve clarity or save time. These documents often contain pricing details, partner agreements, or strategic information that was never meant to leave the organization's control.

Technical data presents another layer of risk. Developers and IT staff regularly use AI to troubleshoot errors or review code. In doing so, they may share proprietary logic, system architecture details, API keys, or internal references that provide a much deeper view into how systems are built and secured.

What makes this exposure particularly challenging is how incremental it is. No single prompt feels significant. Each interaction seems small and reasonable on its own. Over time, however, these fragments of data accumulate across multiple tools, accounts, and users, creating a broad and unmanaged data footprint outside the organization.

For SMBs, this can translate into compliance violations, contractual breaches, or loss of intellectual property without any clear triggering event. For MSPs, it introduces a risk that is difficult to explain after the fact, especially when there was no obvious incident to point to.

Shadow AI doesn’t create one dramatic failure. It creates many quiet ones, spread across daily workflows that were never designed with data governance in mind.

Why SMBs Are Especially Vulnerable

Shadow AI exists everywhere, but its impact isn’t evenly distributed. Small and midsize businesses are exposed in ways larger organizations are often better equipped to manage, even when they face similar usage patterns.

Limited Governance Around AI Use

Most SMBs don’t have formal policies governing how generative AI tools should be used. In many cases, leadership has encouraged experimentation without defining boundaries, or has simply not addressed AI usage at all. This leaves employees to make judgement calls about what data is appropriate to share, often without understanding the downstream consequences.

Without clear guidance, Shadow AI becomes the default rather than the exception.

Productivity Pressure Over Policy Awareness

Employees at SMBs are often asked to move quickly and take on multiple roles. AI tools feel like a natural extension of that expectation. Drafting faster, summarizing quicker, and automating routine work are seen as responsible behavior, not risky behavior.

Because Shadow AI aligns with productivity goals, it rarely triggers the same caution as installing unapproved software or bypassing IT controls. Speed wins over scrutiny.

Lack of Visibility Into Everyday Data Handling

SMBs typically lack visibility into how data is handled once it leaves core systems. There are few controls around browser-based workflows, limited monitoring of AI usage, and little insight into what information is being shared outside approved platforms.

This makes Shadow AI difficult to assess even after concerns arise. By the time questions are asked, data has already moved beyond the organization’s reach.

Heavy Reliance on MSPs for Security Decisions

Most SMBs rely on MSPs to define and enforce security practices. As Shadow AI introduces a new category of data risk, clients naturally look to their MSPs for answers. They expect guidance on what is acceptable, what is not, and how AI can be used safely without slowing work down.

This places MSPs in a challenging position. They are expected to manage a risk that is driven by user behavior, spans multiple tools, and lacks clear technical boundaries.

The Resulting Risk Gap

The combination of unclear policies, strong productivity incentives, and limited visibility creates a gap between how SMBs believe data is protected and how it actually moves in practice. Shadow AI widens that gap quietly, without triggering the warning signs organizations are used to watching for. For SMBs, this increases the likelihood of accidental exposure. For MSPs, it introduces a responsibility that can’t be addressed with traditional controls alone.

The MSP Challenge: Visibility Without Killing Productivity

For MSPs, Shadow AI introduces a difficult balancing act. Clients want the benefits of generative AI, but they also expect their data to remain protected. Simply blocking AI tools is rarely an option. It slows work down, frustrates users, and often pushes usage further into the shadows rather than eliminating it.

The real challenge is visibility. MSPs are expected to understand how AI tools are being used across client environments, even when that activity doesn’t flow through traditional systems. Shadow AI operates at the edges of visibility, woven into everyday workflows that MSP tooling was never designed to monitor directly.

At the same time, MSPs must support clients with very different risk profiles. A creative agency, a healthcare provider, and a financial services firm may all use generative AI, but the data they handle and the regulations they face are not the same. A single, rigid policy doesn’t work across every client.

Traditional security approaches also struggle in this space. Endpoint controls don’t capture browser-based interactions effectively. Network-based controls lack context about what data is being shared. Even data loss prevention tools can fall short when AI usage is embedded in normal business activity rather than a clear violation.

This puts MSPs in a position where they are accountable for outcomes without having straightforward levers to pull. They need ways to guide AI usage, apply boundaries, and understand risk without introducing friction that undermines productivity.

Solving this problem requires a shift in how AI usage is governed. Visibility, context, and user behavior matter as much as blocking or detection. For MSPs, the goal is not to eliminate Shadow AI, but to bring it into a managed, observable, and safer operating model.

Governance Best Practices for Shadow AI

Effective governance of Shadow AI doesn’t start with restriction. It starts with clarity. SMBs and MSPs need shared expectations around how generative AI can be used, what data is appropriate to share, and where guardrails are necessary.

Define Acceptable and Prohibited AI Use

The first step is establishing clear guidance. Employees should understand which AI use cases are encouraged, which are allowed with caution, and which are not permitted at all. This is especially important for data types such as customer information, financial records, intellectual property, and regulated data.

Classify Data Before Governing Tools

AI governance is most effective when it focuses on data rather than tools. Instead of trying to block or approve individual AI platforms, organizations should define what categories of data should never be shared with external AI services.

When users understand that certain data types are off-limits regardless of the tool, governance becomes easier to explain and enforce.
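To make "data before tools" concrete, here is a minimal sketch of a tool-agnostic check that maps detected data categories to the three tiers described above (allowed, allowed with caution, not permitted). The category names, regex patterns, and tier assignments are hypothetical and purely illustrative; pattern matching like this catches only obvious identifiers and will miss sensitive context that has no fixed format.

```python
import re

# Hypothetical data categories and illustrative detection patterns.
# Real classification needs far more robust detection than these regexes.
PATTERNS = {
    "email_address": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "card_number": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
    "api_key": re.compile(r"\b(?:sk|pk|api)-[A-Za-z0-9]{16,}\b"),
}

# Hypothetical policy: categories that may never go to an external AI
# service, and categories that are allowed only with caution.
PROHIBITED = {"card_number", "api_key"}
CAUTION = {"email_address"}


def classify(text: str) -> set[str]:
    """Return the set of data categories detected in the text."""
    return {name for name, rx in PATTERNS.items() if rx.search(text)}


def decide(text: str) -> str:
    """Map detected categories to a tier, regardless of which AI tool is used."""
    found = classify(text)
    if found & PROHIBITED:
        return "block"
    if found & CAUTION:
        return "caution"
    return "allow"


print(decide("Summarize this meeting agenda"))              # allow
print(decide("Reply to jane.doe@example.com"))              # caution
print(decide("Why does sk-ABCDEF1234567890abcd fail?"))     # block
```

Because the decision depends only on the data, the same rule applies whether an employee uses one AI assistant or another, which is what makes it easier to explain to users.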

Educate Users on Real-World Risk

Most Shadow AI behavior is driven by productivity, not negligence. Training should focus on realistic scenarios rather than abstract rules. Explaining how small prompts can expose sensitive context helps users make better decisions without feeling restricted.

Education turns governance into a shared responsibility rather than an enforcement problem.

Apply Controls at the Identity and Access Layer

Because Shadow AI usage often occurs through normal login sessions, identity-based controls play an important role. Context-aware authentication, device trust, and access policies can help limit risky activity without blocking legitimate work.

When access decisions consider who the user is, what device they are using, and where they are connecting from, AI usage can be managed more intelligently.
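A minimal sketch of such a context-aware decision might look like the following. The context fields, group names, network labels, and the three outcomes are hypothetical assumptions for illustration; this is not how any particular identity product, including HENNGE Identity, evaluates policies.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessContext:
    user_group: str       # who: hypothetical groups such as "engineering" or "finance"
    device_managed: bool  # what device: enrolled and trusted by the organization
    network: str          # where: hypothetical labels "office", "vpn", or "public"


# Hypothetical: groups that routinely handle regulated data.
SENSITIVE_GROUPS = {"finance", "hr"}


def ai_access_decision(ctx: AccessContext) -> str:
    """Return 'allow', 'restricted' (access, but no file uploads), or 'deny'."""
    # Sensitive roles on untrusted devices get no access to external AI tools.
    if not ctx.device_managed and ctx.user_group in SENSITIVE_GROUPS:
        return "deny"
    # Untrusted devices or public networks keep access but lose risky actions.
    if not ctx.device_managed or ctx.network == "public":
        return "restricted"
    return "allow"


print(ai_access_decision(AccessContext("engineering", True, "office")))  # allow
print(ai_access_decision(AccessContext("finance", False, "vpn")))        # deny
```

The middle "restricted" outcome reflects the goal of limiting risky activity without blocking legitimate work: most users keep access, and only the combination of context and role determines how much they can do.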

Monitor Patterns Instead of Blocking Innovation

Rather than attempting to eliminate Shadow AI, MSPs should focus on visibility and trends. Monitoring usage patterns over time helps identify risky behavior, gaps in understanding, and opportunities for policy refinement.

This approach allows organizations to adapt as AI tools evolve, without relying on static rules that quickly become outdated.
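As a rough sketch of what "patterns instead of blocking" can mean in practice, the snippet below aggregates usage events into weekly counts per AI service and surfaces users with repeated flagged events as candidates for follow-up training. The event fields, the example hostnames, the outcome labels, and the threshold are all hypothetical; where such events would come from (browser, proxy, or identity logs) is an assumption left open here.

```python
from collections import Counter
from typing import NamedTuple


class UsageEvent(NamedTuple):
    user: str
    domain: str   # hypothetical: AI service hostname seen in a browser or proxy log
    week: int     # ISO week number
    outcome: str  # hypothetical labels: "allow", "caution", or "block"


def weekly_domain_counts(events):
    """Count events per (week, domain) to show which AI tools are trending."""
    return Counter((e.week, e.domain) for e in events)


def users_needing_follow_up(events, threshold=3):
    """Return users whose flagged (non-'allow') events reach the threshold.

    Repeated flags usually point to a training gap, not a reason to block.
    """
    flagged = Counter(e.user for e in events if e.outcome != "allow")
    return sorted(user for user, n in flagged.items() if n >= threshold)


events = [UsageEvent("ana", "chat.example.com", 7, "caution")] * 3 + [
    UsageEvent("ben", "chat.example.com", 7, "allow"),
    UsageEvent("ben", "notes.example.com", 8, "block"),
]
print(weekly_domain_counts(events)[(7, "chat.example.com")])  # 4
print(users_needing_follow_up(events))                        # ['ana']
```

Reviewing these trends periodically lets rules evolve with actual behavior instead of being fixed once and left to go stale.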

How MSPs Can Turn Shadow AI Into a Managed Security Opportunity

Shadow AI is often framed purely as risk, but for MSPs it also represents an opportunity to expand their role from reactive support to proactive guidance. As clients adopt generative AI faster than policies can keep up, many SMBs are looking for help defining what safe usage actually looks like.

This creates space for MSPs to step in with structured, ongoing services rather than one-time recommendations.

Position AI Governance as an Ongoing Service

AI usage is not a project with a clear end state. Tools change, capabilities evolve, and employee behavior adapts quickly. MSPs can package AI governance as a managed service that includes policy definition, periodic reviews, and adjustments as client needs change.

This reframes Shadow AI from a one-off problem into a continuous responsibility that benefits from expert oversight.

Help Clients Translate Policy Into Practice

Many SMBs are willing to approve AI usage in principle but struggle to operationalize it. MSPs can bridge this gap by helping clients turn high-level guidance into enforceable controls. This includes aligning AI usage rules with identity policies, access controls, and device requirements that already exist in the environment.

By connecting governance to day-to-day workflows, MSPs help ensure policies are followed in practice, not just documented.

Provide Visibility That Builds Trust

Clients often underestimate how widely AI tools are being used inside their organizations. Providing visibility into usage patterns, trends, and potential risk areas allows MSPs to have informed conversations based on real behavior rather than assumptions.

This visibility supports more productive discussions with leadership and reinforces the MSP’s role as a trusted advisor rather than a blocker.

Create Repeatable Models Across Clients

Shadow AI affects nearly every SMB, even if the specifics differ by industry. MSPs can develop standardized approaches to AI governance that can be tailored per client without being rebuilt from scratch each time. This improves efficiency while maintaining flexibility.

Over time, this repeatability allows MSPs to scale AI-related services without significantly increasing operational overhead.

Strengthen Long-Term Client Relationships

When MSPs help clients adopt AI safely, they become involved in how the business operates, not just how it’s secured. That deeper integration increases trust and reduces churn. AI governance becomes another area where the MSP’s value compounds over time rather than being replaced by a point solution.

Shadow AI may introduce new risks, but it also opens the door for MSPs to expand their relevance in a rapidly changing technology landscape.

Identity as the Control Plane for Shadow AI

Managing Shadow AI requires more than written policies. It requires controls that operate where AI usage actually happens and that scale across users, devices, and applications without disrupting work.

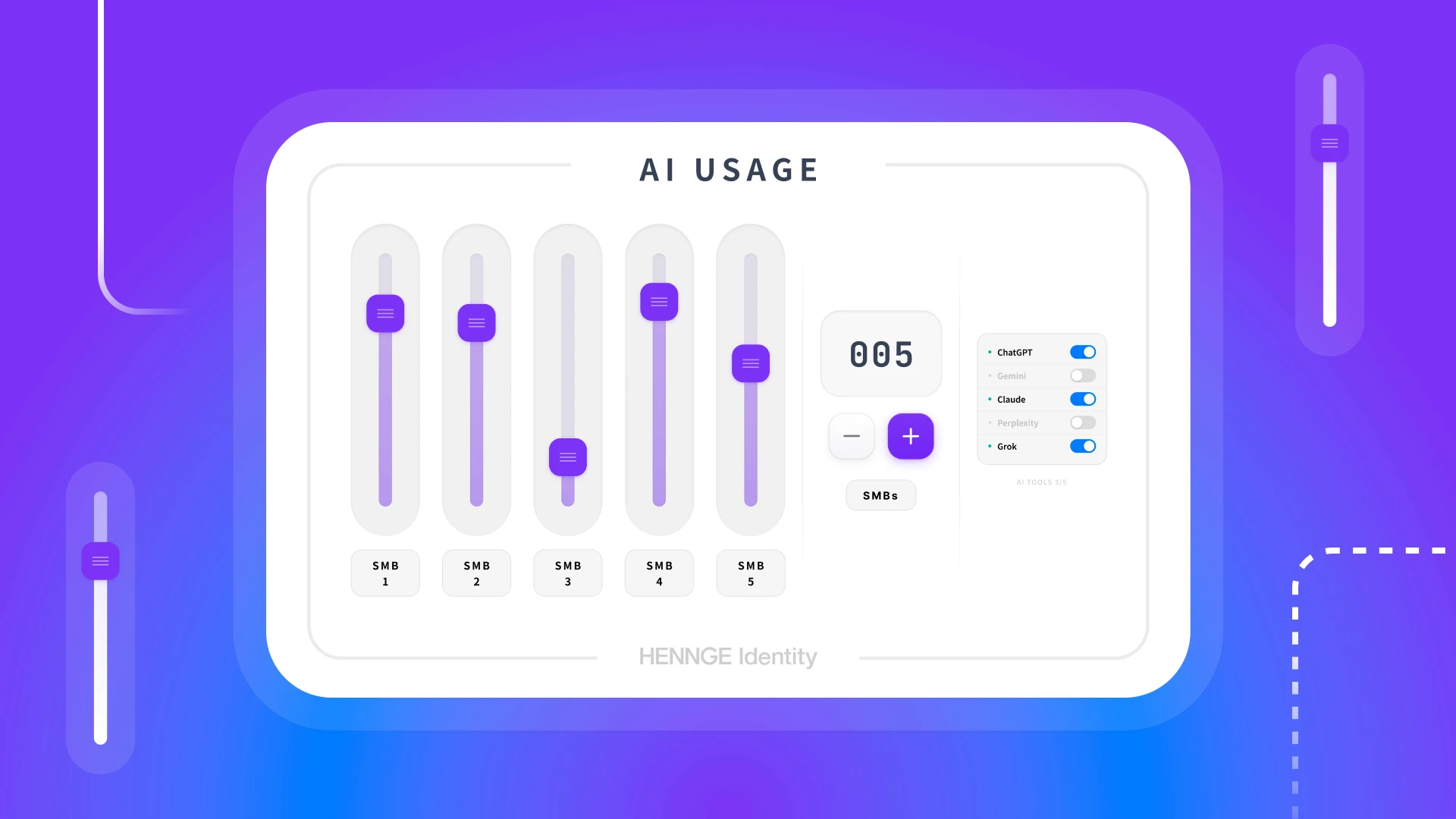

HENNGE Identity provides an identity-first foundation that helps MSPs introduce governance without relying on heavy-handed restrictions. By applying access controls at the identity and browser level, MSPs can guide how users interact with AI tools while keeping productivity intact.

Context-aware access policies allow AI usage to be evaluated based on who the user is, what device they are using, and how they are connecting. Browser-level controls help limit risky actions, such as uploading sensitive files or copying protected data, without blocking access entirely. Together, these capabilities make it possible to manage Shadow AI as part of a broader identity and access strategy rather than as a standalone problem.

For MSPs, this approach supports consistent governance across clients with different risk profiles. For SMBs, it provides guardrails that reduce accidental exposure without discouraging innovation.

Shadow AI is already a part of everyday work at SMBs. The risk is not that employees are using AI, but that they are doing so without guidance, visibility, or safeguards. As generative AI becomes more deeply embedded in business workflows, MSPs have an opportunity to help clients adopt it responsibly and protect sensitive data along the way. We’ll continue exploring emerging risks like Shadow AI and how MSPs can address them in future articles. To stay informed, subscribe to our newsletter. If you’d like to learn how HENNGE can support your MSP practice, feel free to contact us anytime.